Notre intégration Snowflake vous permet de collecter des données complètes sur divers aspects, notamment les performances des requêtes, la santé du système de stockage, l'état de l'entrepôt et les informations de facturation.

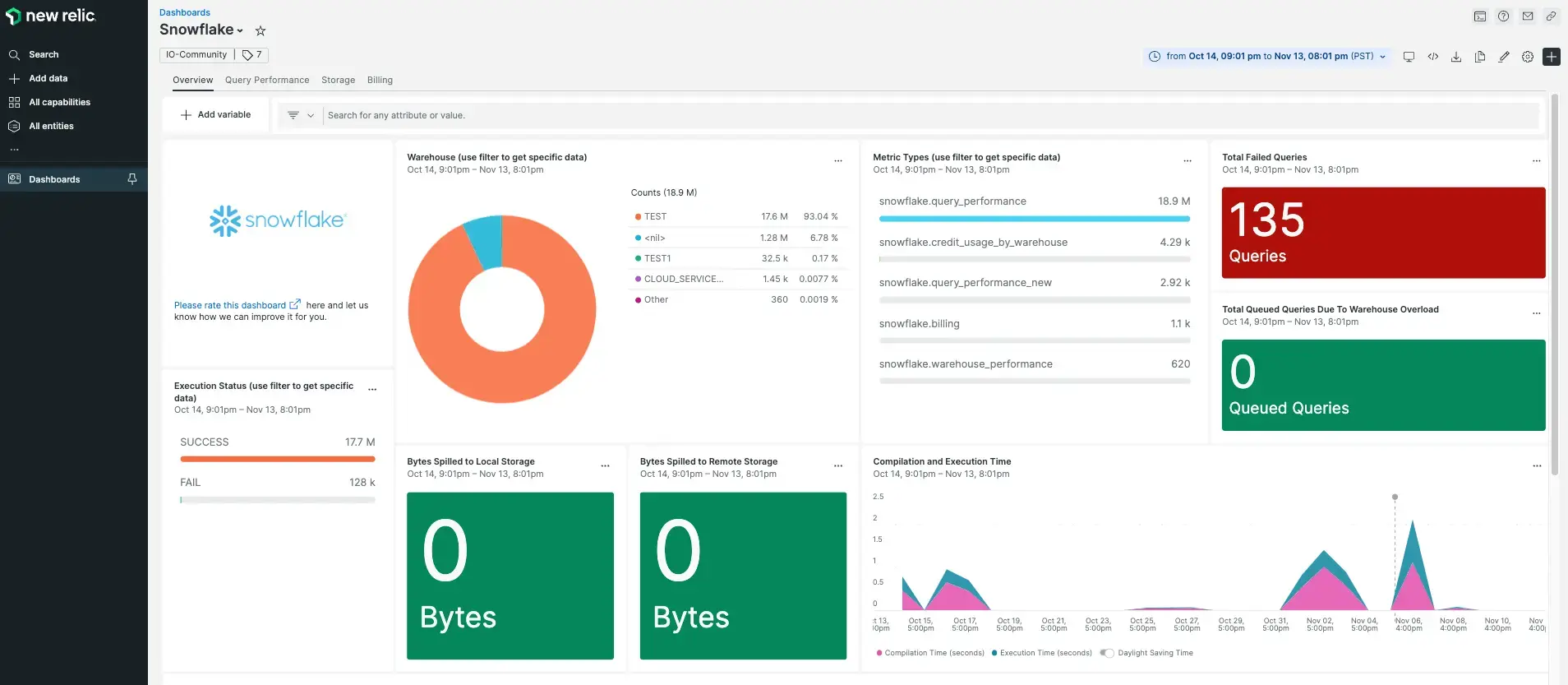

Après avoir configuré l'intégration de Snowflake avec New Relic, visualisez vos données dans un dashboard comme celui-ci, dès la sortie de la boîte.

Installer l' agent d'infrastructure

Pour utiliser l’intégration Snowflake, vous devez également installer l’agent d’infrastructure sur le même hôte. L'agent infrastructure monitore l'hôte lui-même, tandis que l'intégration que vous installerez à l'étape suivante étend votre monitoring avec des données spécifiques à Snowflake.

Configurer les métriques Snowflake

Exécutez la commande ci-dessous pour stocker les métriques Snowflake au format JSON, ce qui permet à nri-flex de les lire. Veillez à modifier ACCOUNT, USERNAME et SNOWSQL_PWD en conséquence.

$# Run the below command as a 1 minute cronjob$SNOWSQL_PWD='Replaceme' snowsql -o output_format=json -o remove_comments=true -o header=true -o timing=false -o friendly=false -a <ACCOUNT> -u <USERNAME> -q 'SELECT SERVICE_TYPE, NAME, AVG(CREDITS_USED_COMPUTE) AS "CREDITS_USED_COMPUTE_AVERAGE", SUM(CREDITS_USED_COMPUTE) AS "CREDITS_USED_COMPUTE_SUM", AVG(CREDITS_USED_CLOUD_SERVICES) AS "CREDITS_USED_CLOUD_SERVICES_AVERAGE", SUM(CREDITS_USED_CLOUD_SERVICES) AS "CREDITS_USED_CLOUD_SERVICES_SUM", AVG(CREDITS_USED) AS "CREDITS_USED_AVERAGE", SUM(CREDITS_USED) AS "CREDITS_USED_SUM" FROM "SNOWFLAKE"."ACCOUNT_USAGE"."METERING_HISTORY" WHERE start_time >= DATE_TRUNC(day, CURRENT_DATE()) GROUP BY 1, 2;' > /tmp/snowflake-account-metering.json$SNOWSQL_PWD='Replaceme' snowsql -o output_format=json -o remove_comments=true -o header=true -o timing=false -o friendly=false -a <ACCOUNT> -u <USERNAME> -q 'SELECT WAREHOUSE_NAME, AVG(AVG_RUNNING) AS "RUNNING_AVERAGE", AVG(AVG_QUEUED_LOAD) AS "QUEUED_LOAD_AVERAGE", AVG(AVG_QUEUED_PROVISIONING) AS "QUEUED_PROVISIONING_AVERAGE", AVG(AVG_BLOCKED) AS "BLOCKED_AVERAGE" FROM "SNOWFLAKE"."ACCOUNT_USAGE"."WAREHOUSE_LOAD_HISTORY" GROUP BY 1;' > /tmp/snowflake-warehouse-load-history-metrics.json$SNOWSQL_PWD='Replaceme' snowsql -o output_format=json -o remove_comments=true -o header=true -o timing=false -o friendly=false -a <ACCOUNT> -u <USERNAME> -q 'SELECT WAREHOUSE_NAME, avg(CREDITS_USED_COMPUTE) as "CREDITS_USED_COMPUTE_AVERAGE", sum(CREDITS_USED_COMPUTE) as "CREDITS_USED_COMPUTE_SUM", avg(CREDITS_USED_CLOUD_SERVICES) as "CREDITS_USED_CLOUD_SERVICES_AVERAGE", sum(CREDITS_USED_CLOUD_SERVICES) as "CREDITS_USED_CLOUD_SERVICES_SUM", avg(CREDITS_USED) as "CREDITS_USED_AVERAGE", sum(CREDITS_USED) as "CREDITS_USED_SUM" from "SNOWFLAKE"."ACCOUNT_USAGE"."WAREHOUSE_METERING_HISTORY" group by 1;' > /tmp/snowflake-warehouse-metering.json$SNOWSQL_PWD='Replaceme' snowsql -o output_format=json -o remove_comments=true -o header=true -o timing=false -o friendly=false -a <ACCOUNT> -u <USERNAME> -q 'SELECT table_name, table_schema, avg(ACTIVE_BYTES) as "ACTIVE_BYTES_AVERAGE", avg(TIME_TRAVEL_BYTES) as "TIME_TRAVEL_BYTES_AVERAGE", avg(FAILSAFE_BYTES) as "FAILSAFE_BYTES_AVERAGE", avg(RETAINED_FOR_CLONE_BYTES) as "RETAINED_FOR_CLONE_BYTES_AVERAGE" from "SNOWFLAKE"."ACCOUNT_USAGE"."TABLE_STORAGE_METRICS" group by 1, 2;' > /tmp/snowflake-table-storage-metrics.json$SNOWSQL_PWD='Replaceme' snowsql -o output_format=json -o remove_comments=true -o header=true -o timing=false -o friendly=false -a <ACCOUNT> -u <USERNAME> -q 'SELECT STORAGE_BYTES, STAGE_BYTES, FAILSAFE_BYTES FROM "SNOWFLAKE"."ACCOUNT_USAGE"."STORAGE_USAGE" ORDER BY USAGE_DATE DESC LIMIT 1;' > /tmp/snowflake-storage-usage.json$SNOWSQL_PWD='Replaceme' snowsql -o output_format=json -o remove_comments=true -o header=true -o timing=false -o friendly=false -a <ACCOUNT> -u <USERNAME> -q 'SELECT USAGE_DATE, AVG(AVERAGE_STAGE_BYTES) FROM "SNOWFLAKE"."ACCOUNT_USAGE"."STAGE_STORAGE_USAGE_HISTORY" GROUP BY USAGE_DATE;' > /tmp/snowflake-stage-storage-usage-history.json$SNOWSQL_PWD='Replaceme' snowsql -o output_format=json -o remove_comments=true -o header=true -o timing=false -o friendly=false -a <ACCOUNT> -u <USERNAME> -q 'SELECT DATABASE_NAME, AVG(CREDITS_USED) AS "CREDITS_USED_AVERAGE", SUM(CREDITS_USED) AS "CREDITS_USED_SUM", AVG(BYTES_TRANSFERRED) AS "BYTES_TRANSFERRED_AVERAGE", SUM(BYTES_TRANSFERRED) AS "BYTES_TRANSFERRED_SUM" FROM "SNOWFLAKE"."ACCOUNT_USAGE"."REPLICATION_USAGE_HISTORY" GROUP BY DATABASE_NAME;' > /tmp/snowflake-replication-usage-history.json$SNOWSQL_PWD='Replaceme' snowsql -o output_format=json -o remove_comments=true -o header=true -o timing=false -o friendly=false -a <ACCOUNT> -u <USERNAME> -q 'SELECT QUERY_TYPE, WAREHOUSE_NAME, DATABASE_NAME, SCHEMA_NAME, AVG(EXECUTION_TIME) AS "EXECUTION_TIME_AVERAGE", AVG(COMPILATION_TIME) AS "COMPILATION_TIME_AVERAGE", AVG(BYTES_SCANNED) AS "BYTES_SCANNED_AVERAGE", AVG(BYTES_WRITTEN) AS "BYTES_WRITTEN_AVERAGE", AVG(BYTES_DELETED) AS "BYTES_DELETED_AVERAGE", AVG(BYTES_SPILLED_TO_LOCAL_STORAGE) AS "BYTES_SPILLED_TO_LOCAL_STORAGE_AVERAGE", AVG(BYTES_SPILLED_TO_REMOTE_STORAGE) AS "BYTES_SPILLED_TO_REMOTE_STORAGE_AVERAGE" FROM "SNOWFLAKE"."ACCOUNT_USAGE"."QUERY_HISTORY" GROUP BY QUERY_TYPE, WAREHOUSE_NAME, DATABASE_NAME, SCHEMA_NAME;' > /tmp/snowflake-query-history.json$SNOWSQL_PWD='Replaceme' snowsql -o output_format=json -o remove_comments=true -o header=true -o timing=false -o friendly=false -a <ACCOUNT> -u <USERNAME> -q 'SELECT PIPE_NAME, AVG(CREDITS_USED) AS "CREDITS_USED_AVERAGE", SUM(CREDITS_USED) AS "CREDITS_USED_SUM", AVG(BYTES_INSERTED) AS "BYTES_INSERTED_AVERAGE", SUM(BYTES_INSERTED) AS "BYTES_INSERTED_SUM", AVG(FILES_INSERTED) AS "FILES_INSERTED_AVERAGE", SUM(FILES_INSERTED) AS "FILES_INSERTED_SUM" FROM "SNOWFLAKE"."ACCOUNT_USAGE"."PIPE_USAGE_HISTORY" GROUP BY PIPE_NAME;' > /tmp/snowflake-pipe-usage.json$SNOWSQL_PWD='Replaceme' snowsql -o output_format=json -o remove_comments=true -o header=true -o timing=false -o friendly=false -a <ACCOUNT> -u <USERNAME> -q 'SELECT QUERY_ID, QUERY_TEXT, (EXECUTION_TIME / 60000) AS EXEC_TIME, WAREHOUSE_NAME, USER_NAME, EXECUTION_STATUS FROM "SNOWFLAKE"."ACCOUNT_USAGE"."QUERY_HISTORY" WHERE EXECUTION_STATUS = '\''SUCCESS'\'' ORDER BY EXECUTION_TIME DESC;' > /tmp/snowflake-longest-queries.json$SNOWSQL_PWD='Replaceme' snowsql -o output_format=json -o remove_comments=true -o header=true -o timing=false -o friendly=false -a <ACCOUNT> -u <USERNAME> -q 'SELECT EVENT_ID, EVENT_TIMESTAMP, EVENT_TYPE, REPORTED_CLIENT_TYPE, REPORTED_CLIENT_VERSION, FIRST_AUTHENTICATION_FACTOR, SECOND_AUTHENTICATION_FACTOR, IS_SUCCESS, ERROR_CODE, ERROR_MESSAGE FROM "SNOWFLAKE"."ACCOUNT_USAGE"."LOGIN_HISTORY" WHERE IS_SUCCESS = '\''NO'\'';' > /tmp/snowflake-login-failures.json$SNOWSQL_PWD='Replaceme' snowsql -o output_format=json -o remove_comments=true -o header=true -o timing=false -o friendly=false -a <ACCOUNT> -u <USERNAME> -q 'SELECT DATABASE_NAME, AVERAGE_DATABASE_BYTES, AVERAGE_FAILSAFE_BYTES FROM "SNOWFLAKE"."ACCOUNT_USAGE"."DATABASE_STORAGE_USAGE_HISTORY" ORDER BY USAGE_DATE DESC LIMIT 1;' > /tmp/snowflake-database-storage-usage.json$SNOWSQL_PWD='Replaceme' snowsql -o output_format=json -o remove_comments=true -o header=true -o timing=false -o friendly=false -a <ACCOUNT> -u <USERNAME> -q 'SELECT SOURCE_CLOUD, SOURCE_REGION, TARGET_CLOUD, TARGET_REGION, TRANSFER_TYPE, AVG(BYTES_TRANSFERRED) AS "BYTES_TRANSFERRED_AVERAGE", SUM(BYTES_TRANSFERRED) AS "BYTES_TRANSFERRED_SUM" FROM "SNOWFLAKE"."ACCOUNT_USAGE"."DATA_TRANSFER_HISTORY" GROUP BY 1, 2, 3, 4, 5;' > /tmp/snowflake-data-transfer-usage.json$SNOWSQL_PWD='Replaceme' snowsql -o output_format=json -o remove_comments=true -o header=true -o timing=false -o friendly=false -a <ACCOUNT> -u <USERNAME> -q 'SELECT WAREHOUSE_NAME, SUM(CREDITS_USED) AS TOTAL_CREDITS_USED FROM "SNOWFLAKE"."ACCOUNT_USAGE"."WAREHOUSE_METERING_HISTORY" GROUP BY 1 ORDER BY 2 DESC;' > /tmp/snowflake-credit-usage-by-warehouse.json$SNOWSQL_PWD='Replaceme' snowsql -o output_format=json -o remove_comments=true -o header=true -o timing=false -o friendly=false -a <ACCOUNT> -u <USERNAME> -q 'SELECT TABLE_NAME, DATABASE_NAME, SCHEMA_NAME, AVG(CREDITS_USED) AS "CREDITS_USED_AVERAGE", SUM(CREDITS_USED) AS "CREDITS_USED_SUM", AVG(NUM_BYTES_RECLUSTERED) AS "BYTES_RECLUSTERED_AVERAGE", SUM(NUM_BYTES_RECLUSTERED) AS "BYTES_RECLUSTERED_SUM", AVG(NUM_ROWS_RECLUSTERED) AS "ROWS_RECLUSTERED_AVERAGE", SUM(NUM_ROWS_RECLUSTERED) AS "ROWS_RECLUSTERED_SUM" FROM "SNOWFLAKE"."ACCOUNT_USAGE"."AUTOMATIC_CLUSTERING_HISTORY" GROUP BY 1, 2, 3;' > /tmp/snowflake-automatic-clustering.json$SNOWSQL_PWD='Replaceme' snowsql -o output_format=json -o remove_comments=true -o header=true -o timing=false -o friendly=false -a <ACCOUNT> -u <USERNAME> -q 'select USER_NAME,EVENT_TYPE,IS_SUCCESS,ERROR_CODE,ERROR_MESSAGE,FIRST_AUTHENTICATION_FACTOR,SECOND_AUTHENTICATION_FACTOR from "SNOWFLAKE"."ACCOUNT_USAGE"."LOGIN_HISTORY";' > /tmp/snowflake-account-details.jsonActiver l'intégration de Snowflake avec nri-flex

Pour configurer l’intégration Snowflake, suivez ces étapes :

Créez un fichier nommé

nri-snowflake-config.ymldans le répertoire d'intégration :bash$touch /etc/newrelic-infra/integrations.d/nri-snowflake-config.ymlAjoutez le snippet suivant à votre fichier

nri-snowflake-config.ymlpour permettre à l'agent de capturer les données Snowflake :---integrations:- name: nri-flexinterval: 30sconfig:name: snowflakeAccountMeteringapis:- name: snowflakeAccountMeteringfile: /tmp/snowflake-account-metering.json- name: nri-flexinterval: 30sconfig:name: snowflakeWarehouseLoadHistoryapis:- name: snowflakeWarehouseLoadHistoryfile: /tmp/snowflake-warehouse-load-history-metrics.json- name: nri-flexinterval: 30sconfig:name: snowflakeWarehouseMeteringapis:- name: snowflakeWarehouseMeteringfile: /tmp/snowflake-warehouse-metering.json- name: nri-flexinterval: 30sconfig:name: snowflakeTableStorageapis:- name: snowflakeTableStoragefile: /tmp/snowflake-table-storage-metrics.json- name: nri-flexinterval: 30sconfig:name: snowflakeStageStorageUsageapis:- name: snowflakeStageStorageUsagefile: /tmp/snowflake-stage-storage-usage-history.json- name: nri-flexinterval: 30sconfig:name: snowflakeReplicationUsgaeapis:- name: snowflakeReplicationUsgaefile: /tmp/snowflake-replication-usage-history.json- name: nri-flexinterval: 30sconfig:name: snowflakeQueryHistoryapis:- name: snowflakeQueryHistoryfile: /tmp/snowflake-query-history.json- name: nri-flexinterval: 30sconfig:name: snowflakePipeUsageapis:- name: snowflakePipeUsagefile: /tmp/snowflake-pipe-usage.json- name: nri-flexinterval: 30sconfig:name: snowflakeLongestQueriesapis:- name: snowflakeLongestQueriesfile: /tmp/snowflake-longest-queries.json- name: nri-flexinterval: 30sconfig:name: snowflakeLoginFailureapis:- name: snowflakeLoginFailurefile: /tmp/snowflake-login-failures.json- name: nri-flexinterval: 30sconfig:name: snowflakeDatabaseStorageUsageapis:- name: snowflakeDatabaseStorageUsagefile: /tmp/snowflake-database-storage-usage.json- name: nri-flexinterval: 30sconfig:name: snowflakeDataTransferUsageapis:- name: snowflakeDataTransferUsagefile: /tmp/snowflake-data-transfer-usage.json- name: nri-flexinterval: 30sconfig:name: snowflakeCreditUsageByWarehouseapis:- name: snowflakeCreditUsageByWarehousefile: /tmp/snowflake-credit-usage-by-warehouse.json- name: nri-flexinterval: 30sconfig:name: snowflakeAutomaticClusteringapis:- name: snowflakeAutomaticClusteringfile: /tmp/snowflake-automatic-clustering.json- name: nri-flexinterval: 30sconfig:name: snowflakeStorageUsageapis:- name: snowflakeStorageUsagefile: /tmp/snowflake-storage-usage.json- name: nri-flexinterval: 30sconfig:name: snowflakeAccountDetailsapis:- name: snowflakeAccountDetailsfile: /tmp/snowflake-account-details.json

Redémarrez l'agent New Relic Infrastructure

Redémarrez votre agent d’infrastructure.

$sudo systemctl restart newrelic-infra.serviceDans quelques minutes, votre application enverra des métriques à one.newrelic.com.

Trouvez vos données

Vous pouvez choisir notre modèle dashboard prédéfini nommé Snowflake pour monitorer les métriques de votre application Snowflake. Suivez ces étapes pour utiliser notre modèle dashboard prédéfini :

De one.newrelic.com, aller à la page + Add data .

Cliquez sur Dashboards.

Dans la barre de recherche, tapez

Snowflake.Le dashboard Snowflake devrait apparaître. Cliquez dessus pour l'installer

Votre dashboard Snowflake est considéré comme un dashboard personnalisé et peut être trouvé dans l'UI Dashboards. Pour obtenir des documents sur l'utilisation et la modification du dashboard, consultez notre documentation dashboard .

Voici une requête NRQL pour vérifier les métriques Snowflake :

SELECT * FROM snowflakeAccountSample

Quelle est la prochaine étape ?

Pour en savoir plus sur la création de requêtes NRQL et la génération de dashboards, consultez ces documents :

- Introduction au générateur de requêtes pour créer des requêtes basiques et avancées.

- Introduction au dashboard pour personnaliser votre dashboard et effectuer différentes actions.

- Gérez votre dashboard pour ajuster votre mode d'affichage du dashboard ou pour ajouter plus de contenu à votre dashboard.